Five emission scenarios are devised, ranging from the absence of any policy to mitigate greenhouse gas emissions to a stringent policy that constrains the total atmospheric concentration of all greenhouse gases to a relatively low level by the year 2100. For each scenario the model projects the probabilities of limiting further global temperature increases. For example, in the absence of any abatement policy, temperatures are likely to increase by 3.5°C to 7.4°C above the 1981-2000 level by the decade 2091-2100.

Emission limits of increasing stringency not only lower the predicted mean temperature increase but also reduce the projected probabilities of large temperature changes, especially the very largest.

Prinn further presents an economic risk analysis showing that investing early in mitigation minimizes the future economic harms arising from the extreme weather and climate events that further warming generates. The financial return on this class of investments is high.

Long-term increases in global temperatures originate from human activities in most developed and developing countries around the world. All these countries should unite to adopt emission abatement policies that minimize further global warming and its harmful consequences.

Introduction. Climate models have been used for several decades (see the previous post) as an important tool for predicting the expected behavior of the global climate. The models include General Circulation Models, which seek to account for the interactions between atmospheric currents above the earth’s surface and oceanic currents in order to project future climate development. An important feature of these models is their incorporation of past and projected future increases in atmospheric greenhouse gases (GHGs), especially those emitted as a result of human activity. Applying these models under various emission scenarios has produced predictions of increased global warming in future decades, and of the harmful climatic and meteorological effects arising from this warming over the same period.

A group working at the Massachusetts Institute of Technology (MIT) and elsewhere has focused on the science and policy of global change. One member of the group, Ronald Prinn, recently published “Development and Application of Earth System Models” (Proc. Natl. Acad. Sci., 2013, vol. 110, pp. 3673-3680). His article is a follow-up to, and builds on, earlier work from the same group (A. P. Sokolov et al., with Prinn as a co-author, J. Climate, 2009, vol. 22, pp. 5175-5204). The present post summarizes the methods and results of Prinn and the MIT climate group.

Earth system models expand on general circulation models by incorporating many facets of worldwide human activity that affect greenhouse gas emissions, the warming of the planet, and the changes predicted to result from these effects. In developing their Integrated Global System Model (IGSM), Prinn and the other authors in the MIT group seek to account for growth in the human population, changes in economic activity that will demand expanded energy supplies, and the greenhouse gas emissions arising from these activities. The structure of their model is summarized in the Details section at the end of this post.

The IGSM results are expressed in terms of probabilities or probability distributions. This is because the calculations incorporated into the model include starting values for both climatic and economic parameters that are selected by a random process; the calculations are then repeated several hundred times and the results are assembled into graphs of probabilities or frequencies of occurrence of a given value of the output. (These are similar to histogram bar charts, which are used when the number of data points is small. In the probability distributions each value, for example of temperature increase, has an associated frequency of occurrence, which varies as the temperature value moves across an essentially continuous range.)
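The ensemble procedure just described can be illustrated with a toy example. In this minimal Python sketch the "model" is a hypothetical one-line function and the parameter ranges are assumptions. Only the workflow mirrors the text: draw starting values at random, repeat the run a few hundred times, then bin the outputs into a frequency distribution and read off the median and the 5-95% range.

```python
import random
import statistics

def toy_warming(sensitivity: float, emissions_factor: float) -> float:
    """Hypothetical stand-in for one model run: warming (°C) from two inputs."""
    return sensitivity * emissions_factor

def run_ensemble(n_runs: int = 400, seed: int = 1) -> list[float]:
    """Repeat the toy run n_runs times with randomly drawn starting values."""
    rng = random.Random(seed)  # fixed seed so the ensemble is reproducible
    results = []
    for _ in range(n_runs):
        # Assumed parameter ranges, for illustration only
        sensitivity = rng.uniform(2.0, 4.5)        # a "climatic" parameter
        emissions_factor = rng.uniform(1.0, 1.6)   # an "economic" parameter
        results.append(toy_warming(sensitivity, emissions_factor))
    return results

def histogram(results: list[float], bin_width: float = 0.5) -> dict[float, float]:
    """Fraction of runs in each bin, keyed by the bin's lower edge."""
    counts: dict[float, int] = {}
    for x in results:
        edge = bin_width * (x // bin_width)
        counts[edge] = counts.get(edge, 0) + 1
    return {edge: n / len(results) for edge, n in sorted(counts.items())}

def summarize(results: list[float]) -> tuple[float, float, float]:
    """5th percentile, median and 95th percentile of the ensemble."""
    cuts = statistics.quantiles(results, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    return cuts[0], statistics.median(results), cuts[-1]

runs = run_ensemble()
p5, median, p95 = summarize(runs)
print(f"median {median:.1f} °C, 5-95% range {p5:.1f}-{p95:.1f} °C")
for edge, frac in histogram(runs).items():
    print(f"{edge:4.1f}-{edge + 0.5:4.1f} °C: {frac:.1%}")
```

With more runs and narrower bins, the bar chart approaches the smooth probability curves described above.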

IGSM forecasts for temperature changes are shown in the graphic below for a “no mitigation policy” case (others call this “business-as-usual”). This case leads to a combined atmospheric concentration of CO2 and other greenhouse gases equivalent to 1330 parts per million (ppm) of CO2, written 1330 ppm CO2 equivalents (CO2-eq), in the decade 2091-2100. The forecasts also include four mitigation policies of increasing stringency, which are modeled to constrain GHGs to 890, 780, 660 and 560 ppm CO2-eq.

Probability distribution of the modeled increase in temperature from the baseline period 1981-2000 to the decade 2091-2100. The legend inside the frame lists first the “no mitigation policy” case, then cases of increasingly rigorous mitigation policies. The probability distribution curves for the same cases proceed from right to left in the graphic. The term “ppm-eq” is the same as the term “CO2-eq” defined in the text above. Each distribution has an associated horizontal bar with a vertical mark near its center. The vertical mark shows the median modeled temperature increase, whose value appears immediately to the right of the legend line in the graphic (e.g. 5.1°C for the no mitigation policy case). The horizontal bar spans the 5th to the 95th percentile of the modeled temperature increase for each case: there is a 5% probability of a smaller increase and a 5% probability of a larger one. This range is shown inside the parentheses in the graphic (e.g. 3.3-8.2°C for the no mitigation policy case). A temperature difference of 1°C corresponds to 1.8°F.

Source: Prinn, Proc. Natl. Acad. Sci., 2013, vol. 110, pp. 3673-3680; http://www.pnas.org/content/110/suppl.1/3673.full.pdf+html?with-ds=yes.
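The CO2-eq figures that label the five cases (1330 ppm for no policy down to 560 ppm) fold all greenhouse gases into a single number. That number is the CO2 concentration that would, by itself, exert the same radiative forcing (the same perturbation of the planet's energy balance). A minimal sketch of the conversion follows. It assumes the widely used simplified expression F = 5.35 ln(C/C0) W/m² for CO2 forcing and a pre-industrial C0 of 280 ppm. The 1.0 W/m² from non-CO2 gases is a hypothetical placeholder, and this is not necessarily the exact procedure used in the IGSM.

```python
import math

C0_PPM = 280.0   # pre-industrial CO2 concentration (ppm), as quoted in this post
ALPHA = 5.35     # W/m^2; coefficient of the simplified CO2 forcing formula (assumed)

def co2_forcing(c_ppm: float) -> float:
    """Radiative forcing (W/m^2) of CO2 at c_ppm, relative to pre-industrial."""
    return ALPHA * math.log(c_ppm / C0_PPM)

def co2_equivalent(total_forcing: float) -> float:
    """CO2 concentration (ppm) that alone would produce total_forcing (W/m^2)."""
    return C0_PPM * math.exp(total_forcing / ALPHA)

# Hypothetical example: CO2 at 393 ppm plus an assumed 1.0 W/m^2 from other gases
total = co2_forcing(393.0) + 1.0
print(f"{co2_equivalent(total):.0f} ppm CO2-eq")
```

Because forcing grows with the logarithm of concentration, adding other gases raises the CO2-eq figure multiplicatively rather than by a fixed number of ppm.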

Several features of the graphic above are noteworthy. First, of course, more stringent abatement policies lead to smaller temperature increases because the atmosphere contains lower concentrations of GHGs than under more lenient policies. Equally significant, each frequency distribution becomes narrower as the abatement policy becomes more stringent. This means that within each distribution, the likelihood of extreme deviations toward warmer temperatures is reduced as the stringency of the policies increases. In other words, the 95th-percentile point (right end of each horizontal bar) lies farther from the median (vertical mark) for the no mitigation policy case than for the others. This distance shrinks as the stringency increases from right to left in the graphic. Furthermore, Prinn states “because the mitigating effects of the policy only appear very distinctly …after 2050, there is significant risk in waiting for very large warming to occur before taking action”.
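Why should tighter limits narrow the distribution and pull in the upper tail most of all? Consider a deliberately crude picture, not the IGSM. Suppose eventual (equilibrium) warming equals an uncertain climate sensitivity S, in °C per doubling of CO2-eq, times the number of doublings above 280 ppm. The same uncertainty in S is then multiplied by a smaller factor at lower concentrations, so the whole distribution contracts, and its long warm tail contracts most. The lognormal spread of S below is an assumption. Because the toy numbers are equilibrium warming relative to pre-industrial, they are not comparable with the 2091-2100 values in the graphic.

```python
import math
import random
import statistics

CASES_PPM = [1330, 890, 780, 660, 560]  # no-policy case, then tighter limits (from the text)

def sample_sensitivities(n: int = 2000, seed: int = 7) -> list[float]:
    """Assumed right-skewed spread of climate sensitivity (°C per doubling)."""
    rng = random.Random(seed)
    return [rng.lognormvariate(math.log(3.0), 0.3) for _ in range(n)]

def warming_spread(c_ppm: float, sensitivities: list[float]) -> tuple[float, float]:
    """Median toy equilibrium warming and the gap from median to 95th percentile."""
    doublings = math.log2(c_ppm / 280.0)  # CO2-eq doublings above pre-industrial
    temps = [s * doublings for s in sensitivities]
    median = statistics.median(temps)
    p95 = statistics.quantiles(temps, n=20)[-1]
    return median, p95 - median

sens = sample_sensitivities()
for c in CASES_PPM:
    median, upper_tail = warming_spread(c, sens)
    print(f"{c:5d} ppm: median {median:.1f} °C, 95th percentile {upper_tail:.1f} °C above it")
```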

The Intergovernmental Panel on Climate Change (IPCC) has promoted the goal of constraining the increase in the long-term global average temperature to less than 2°C (3.6°F) above the pre-industrial level, corresponding to about 450 ppm CO2-eq. Prinn points out that significant concentrations of GHGs have already accumulated, so the temperature has already risen 0.8°C (1.4°F) above the pre-industrial level. His analysis shows that it is virtually impossible to keep the temperature increase within the 2°C goal by the 2091-2100 decade under the four least stringent policy cases. Even the policy limiting CO2-eq to 560 ppm has only a 20% likelihood of doing so.
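The pairing of 2°C with about 450 ppm CO2-eq can be checked with back-of-envelope arithmetic. The check assumes an equilibrium climate sensitivity S of about 3°C per doubling of CO2-eq (a commonly quoted central estimate, used here as an assumption rather than a figure from Prinn's article) and a pre-industrial level of 280 ppm:

$$
\begin{aligned}
\Delta T_{\mathrm{eq}} &\approx S \log_2\!\left(\frac{C}{280\ \mathrm{ppm}}\right) \\
C = 450\ \mathrm{ppm}:\quad \Delta T_{\mathrm{eq}} &\approx 3 \times \log_2(1.61) \approx 3 \times 0.68 \approx 2^\circ\mathrm{C} \\
C = 560\ \mathrm{ppm}:\quad \Delta T_{\mathrm{eq}} &\approx 3 \times \log_2(2) = 3^\circ\mathrm{C}
\end{aligned}
$$

On this rough reckoning, a 560 ppm ceiling points to about 3°C of eventual warming, well above the 2°C goal. That is in line with the low likelihood Prinn finds for that policy. Because the relation describes long-run equilibrium rather than the warming realized by 2100, it is only a consistency check.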

Economic costs of mitigation are estimated using the IGSM component that models human economic activity. Prinn measures global welfare by global consumption of goods and services and expresses welfare costs as a percentage of that consumption. He estimates that welfare grows by 3% per year. So, for example, if the welfare cost of spending on mitigation is estimated at 3%, global welfare gains would be set back by about one year.
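The "one year" equivalence follows from compound growth. This minimal sketch assumes welfare grows at the 3% per year stated above on both the policy and no-policy paths. A fractional loss L then means the policy path reaches any given welfare level t years later, where (1 + g)^t = 1/(1 − L). The function name is illustrative.

```python
import math

def years_of_growth_lost(welfare_loss: float, growth_rate: float = 0.03) -> float:
    """Delay (years) equivalent to a fractional welfare loss under steady growth.

    Solves (1 + growth_rate) ** t == 1 / (1 - welfare_loss) for t.
    """
    return math.log(1.0 / (1.0 - welfare_loss)) / math.log(1.0 + growth_rate)

for loss in (0.01, 0.03):
    print(f"{loss:.0%} welfare loss ~ {years_of_growth_lost(loss):.2f} years of growth")
```

For a 3% loss this gives about 1.03 years, and for a 1% loss about a third of a year.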

The economic cost of imposing mitigation policies is estimated assuming a cap-and-trade pricing regime that is graduated over time. Compared with economic activity under no mitigation policy, the two least stringent policies have very low probabilities of causing a loss of global welfare greater than 1% in attaining the goals discussed in Prinn’s article by the 2091-2100 decade. The probability of a welfare cost above 1% reaches 70% only for the most stringent policy, stabilization at 560 ppm. The probability of exceeding a 3% loss in welfare is essentially zero for the three least stringent cases, and even for the 560 ppm policy it is only 10%. Thus foreseeable investments in mitigation lead to minimal or tolerable losses in global welfare.
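Statements such as "the probability for exceeding 3% loss in welfare" are exceedance probabilities: the fraction of ensemble runs whose result lies above a threshold. This minimal sketch assumes a list of per-run welfare losses. The ten values below are invented for illustration and are not results from Prinn's article.

```python
def exceedance_probability(losses: list[float], threshold: float) -> float:
    """Fraction of ensemble runs whose welfare loss (%) exceeds the threshold."""
    if not losses:
        raise ValueError("empty ensemble")
    return sum(1 for x in losses if x > threshold) / len(losses)

# Hypothetical welfare losses (% of consumption) from ten runs of one policy case
sample_losses = [0.4, 0.7, 0.9, 1.2, 1.5, 1.9, 2.4, 0.5, 3.3, 1.1]
for threshold in (1.0, 3.0):
    p = exceedance_probability(sample_losses, threshold)
    print(f"P(loss > {threshold}%) = {p:.0%}")
```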

Based on the graphic shown above and other information in the article that is not summarized here, Prinn implies that the foreseen increases in long-term global average temperatures carry sizeable worldwide socioeconomic harms. For this reason, he concludes that “[investment in mitigation] is a relatively low economic risk to take, given [that the most stringent mitigation policy of] 560 [CO2-eq] … substantially lower[s] the risk for dangerous amounts of global and Arctic warming”. He emphasizes that this statement assumes the imposition of an effective cap-and-trade regime such as the one described above.

The MIT Earth System Model. The work of Prinn, Sokolov, and the rest of the MIT climate group is important for its integration of climate science and oceanography with human activity as represented by economic and agricultural trends. In this way the prime driver of global warming, man-made emissions of GHGs, is accounted for both in the geophysical realm and in the human, socioeconomic realm.

Prinn’s work is cast in probabilistic terms, providing a sound understanding of the likely temperature increases under five emission scenarios. Projections of future global warming are essentially descriptions of the probabilities that events will occur.

Human activity is increasing atmospheric GHGs. For more than 1,000 years before the industrial revolution, the atmospheric concentration of CO2 was about 280 ppm. Because of mankind’s burning of fossil fuels, it has now risen to more than 393 ppm, and it is increasing every year.

The IPCC has set a goal (which many now fear will not be met) of limiting the warming of the planet to less than 2°C above the pre-industrial level, corresponding to a GHG level of about 450 ppm CO2-eq. It is clear from Prinn’s article that this limit will most likely be exceeded by 2100.

Most of the other GHGs shown in the graphic in the Details section (see below) are purely man-made; they did not exist before the industrial revolution. Methane (CH4) is the principal GHG other than CO2 that has natural origins. Human use of natural gas, which consists mostly of methane, and human construction of landfills, which produce methane, have led to increases in its atmospheric concentration.

Prinn’s temperature scenarios are already apparent in historical data. Patterns like those in the modeled probability distributions of future temperature increases, shown in the graphic above, are already emerging in recent records. Hansen and coworkers (Proc. Natl. Acad. Sci., Aug. 6, 2012) documented very similar shifts of decade-long average temperatures around the globe toward larger increases in the decades preceding 2011 (see the graphic below).

Source: Proceedings of the [U.S.] National Academy of Sciences (Hansen et al., 2012).

The horizontal axis of the graphic shows temperature in units of the standard deviation from the base-period mean. The black curve shows the normal (bell-shaped) frequency distribution expected for purely random variations. Decadal average temperatures were evaluated for a large number of small grid areas on the earth’s surface, and the data for all the grid positions were aggregated to create the decadal frequency distributions. Using rigorous statistical analysis, the authors compared each of the decades 1981-1990, 1991-2000, and 2001-2011 with the base period 1951-1980. They showed that the temperature distributions for these decades shifted successively toward higher temperatures. In the most recent decades, more and more grid points had decadal average temperatures much higher than in the base period (shifted toward larger positive standard deviation values, to the right). The recent decades also deviate strikingly from the behavior expected for a random distribution (black curve). Hansen’s analysis of historical grid-based data suggests that the warming of long-term global average temperatures projected by Prinn and the MIT group is already under way.
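The standardization behind Hansen's graphic can be sketched briefly. Each grid cell's value is converted to a z-score using that cell's own 1951-1980 mean and standard deviation. Pooling the z-scores from all cells gives distributions like those plotted, which are then compared with the normal curve. This is a minimal sketch for a single grid cell using Python's standard statistics module; the temperatures are hypothetical, not Hansen's data.

```python
import statistics
from statistics import NormalDist

def z_scores(base: list[float], recent: list[float]) -> list[float]:
    """Standardize recent values against one grid cell's base-period statistics."""
    mu = statistics.mean(base)
    sd = statistics.stdev(base)
    return [(x - mu) / sd for x in recent]

def fraction_beyond(zs: list[float], k: float) -> float:
    """Observed fraction of standardized values above +k standard deviations."""
    return sum(1 for z in zs if z > k) / len(zs)

# Under purely random (normal) variability, values above +3 sd are rare (about 0.13%)
expected_3sd = 1.0 - NormalDist().cdf(3.0)

# Hypothetical cell (°C): base-period values, then a warmer recent period
base_period = [14.1, 13.8, 14.3, 14.0, 13.9, 14.2, 14.0, 13.7, 14.4, 14.1]
recent_period = [14.6, 14.9, 14.5, 15.0, 14.8]
zs = z_scores(base_period, recent_period)
print(f"expected above +3 sd: {expected_3sd:.2%}; observed: {fraction_beyond(zs, 3.0):.0%}")
```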

Risk-benefit analysis supports investing in mitigation. As global warming proceeds, the weather and climate extremes it produces inflict significant harm on human welfare, and this harm will continue to worsen if warming is left unmitigated. Prinn has used risk analysis to show that the economic loss from delayed welfare gains, caused by investing in mitigation efforts, is far smaller than the economic damage inflicted by inaction. In other words, according to Prinn’s analysis, investing in mitigation policies yields a high economic return.

Worldwide efforts to mitigate GHG emissions are needed. Once emitted, GHGs are dispersed around the world; they carry no label showing their country of origin. The distress and devastation caused by the extreme events that increased warming triggers likewise occur with equal ferocity around the world. Planetary warming is truly a global problem, and it requires mitigating action as early as possible by all emitting countries. As Prinn points out, humanity increases its risk of harm by waiting for very large warming to occur before embarking on mitigating actions.

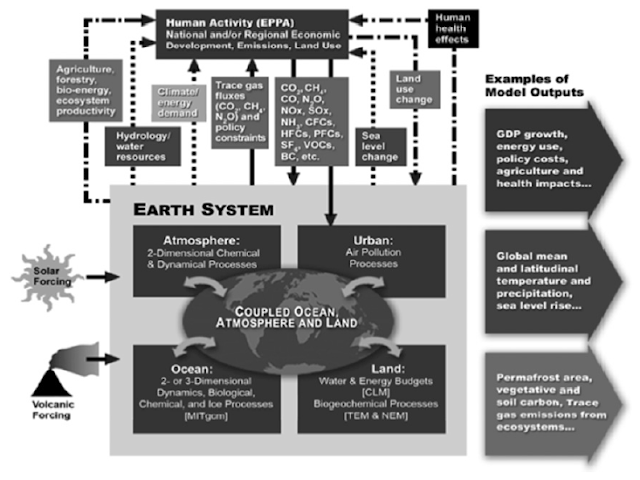

Details

The Integrated Global System Model is a large-scale computational system. In it, a macro-scale Earth System contains four interconnected modules, each of which carries out the computations for its own properties (see the graphic below and its legend).

Schematic depiction of the Integrated Global System Model. The light gray rectangle, the Earth System, comprises the complete computational model system. Within the Earth System are four computational submodels: the Atmosphere, Urban (accounting for particulates and air pollution, which are most prevalent in cities), the Ocean, and the Land. These are computationally coupled together, accounting for the climatic interactions among them, or they can be run independently as needed. The Earth System receives inputs from, and delivers outputs to, Human Activity (at top). Solid lines indicate coupling already included in the IGSM, and the various dashed and dotted lines indicate effects that remain to be modeled computationally. The bulky arrows on the right illustrate end results obtained by running the IGSM. GDP, gross domestic product.

Source: Prinn, Proc. Natl. Acad. Sci., 2013, vol. 110, pp. 3673-3680; http://www.pnas.org/content/110/suppl.1/3673.full.pdf+html?with-ds=yes.

The IGSM used in Prinn’s article is an updated and more comprehensive version of earlier ones, such as that described in A. P. Sokolov et al., J. Climate, 2009, vol. 22, pp. 5175-5204. A computational module external to the Earth System encompasses human activities. These include economic pursuits that depend on energy, land use and its changing patterns (which can store or release GHGs), and the extraction of fossil fuels to supply energy to the economy.

The Human Activity module, named Emissions Prediction and Policy Analysis (EPPA, see the schematic), computationally accounts for most human activities that produce GHGs and consume resources from the Earth System. As shown in the graphic, EPPA supplies GHG emissions and an accounting of land use and its transformations as inputs to the Earth System. In return it receives outputs such as agriculture and forestry, precipitation and terrestrial water resources, and sea level rise, among others.
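The two-way exchange between EPPA and the Earth System can be pictured as a coupled loop that alternates the modules each simulated year. The sketch below is purely schematic. The class names, the single emissions and warming variables, and every coefficient are hypothetical simplifications chosen to show the direction of data flow. They are not the IGSM's actual equations, variables or interfaces.

```python
import math
from dataclasses import dataclass

@dataclass
class HumanActivity:
    """Hypothetical stand-in for EPPA: economic output drives emissions."""
    gdp: float = 1.0
    growth_rate: float = 0.03
    emissions_per_gdp: float = 10.0  # arbitrary units (assumed)

    def step(self, damage_fraction: float) -> float:
        # Climate impacts returned by the Earth System trim this year's output
        self.gdp *= (1.0 + self.growth_rate) * (1.0 - damage_fraction)
        return self.gdp * self.emissions_per_gdp  # emissions passed to the Earth System

@dataclass
class EarthSystem:
    """Hypothetical stand-in for the coupled Atmosphere/Urban/Ocean/Land modules."""
    concentration_ppm: float = 280.0
    warming_c: float = 0.0
    ppm_per_emission_unit: float = 0.05  # assumed conversion factor

    def step(self, emissions: float) -> float:
        self.concentration_ppm += self.ppm_per_emission_unit * emissions
        # Warming relaxes toward a concentration-dependent target (toy dynamics)
        target = 3.0 * math.log2(self.concentration_ppm / 280.0)
        self.warming_c += 0.05 * (target - self.warming_c)
        return 0.001 * self.warming_c ** 2  # impact fraction fed back to Human Activity

def run_coupled(years: int) -> tuple[HumanActivity, EarthSystem]:
    """Alternate the two modules once per simulated year."""
    human, earth = HumanActivity(), EarthSystem()
    damage = 0.0
    for _ in range(years):
        emissions = human.step(damage)   # Human Activity -> Earth System
        damage = earth.step(emissions)   # Earth System -> Human Activity
    return human, earth

human, earth = run_coupled(100)
print(f"after 100 years: {earth.concentration_ppm:.0f} ppm, {earth.warming_c:.1f} °C")
```

Keeping each module behind a single `step` method mirrors the option, noted in the schematic's legend, of running components coupled or independently.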

The graphic includes a complete listing of all important GHGs as inputs from the Human Activity module to the Earth System. CO2 is the principal, but not the only, GHG arising from human activity. Many of the others are important because their ability to act as GHGs, molecule for molecule, is much greater than that of CO2, even though their atmospheric concentrations are relatively low. Like CO2, they also remain in the atmosphere for long times.
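Emissions of different gases are often put on a common footing by weighting each with its global warming potential (GWP). GWP is the time-integrated radiative forcing of one kilogram of the gas, relative to one kilogram of CO2, over a chosen time horizon. This minimal sketch uses the 100-year GWPs tabulated in the IPCC Fourth Assessment Report (CH4 25, N2O 298, SF6 22,800). The emission amounts are hypothetical. As the schematic indicates, the IGSM treats each gas individually rather than relying on this shortcut.

```python
# 100-year global warming potentials (IPCC Fourth Assessment Report values)
GWP100_AR4 = {"CO2": 1, "CH4": 25, "N2O": 298, "SF6": 22800}

def co2_equivalent_emissions(emissions_tonnes: dict[str, float]) -> float:
    """Sum emissions (tonnes of each gas) weighted by 100-year GWP."""
    return sum(GWP100_AR4[gas] * amount for gas, amount in emissions_tonnes.items())

# Hypothetical annual emissions for one source, in tonnes of each gas
example = {"CO2": 1000.0, "CH4": 10.0, "N2O": 1.0, "SF6": 0.01}
print(f"{co2_equivalent_emissions(example):.0f} t CO2-eq")
```

In this example a hundredth of a tonne of SF6 counts for more than a fifth as much as the entire tonnage of CO2.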

Overall, Prinn states that the full-scale computational system is too demanding for even the largest computers. Therefore, depending on the objective of a given project, reduced versions of various modules are employed. Each module has been independently tested and validated to the greatest extent possible before being used in a project calculation.

The IGSM computations are initialized with input values for important parameters that are chosen by random sampling. Ensembles of hundreds of such runs, each providing the output results sought for the project, are aggregated to provide probabilities for outcomes. Such probability assessments are a hallmark of contemporary climate projections; for example, the IPCC likewise characterizes its statements of projected climate properties in probabilistic terms.

© 2013 Henry Auer
